Rebuilding records from scraps and fragments, says S.Ananthanarayanan.

The science of archeology uncovers utensils, dwellings, large structures and the layout of ancient settlements. And where there are records of written matter, the ancient civilisations come ‘literally’ to life.

Yannis Assael, Thea Sommerschield, Brendan Shillingford, Mahyar Bordbar, John Pavlopoulos, Marita Chatzipanagiotou, Ion Androutsopoulos, Jonathan Prag and Nando de Freitas, from Ca’ Foscari University of Venice, Harvard University, Athens University of Economics and Business, University of Oxford and Deep Mind, an AI company based in London, describe in the journal, Nature, how Artificial Intelligence was used, and could be used, to help recover Greek texts from damaged and obscured remains of ancient writings in Greek.

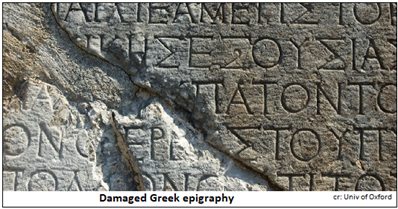

Written records in the form of papyrus or palm leaf are of comparatively recent periods. The more ancient, which have survived, are those that were carved into stone, engraved in metal, or in pottery, as in cuneiform. And much of these records, naturally, are the worse for wear and age. Letters are hence damaged, or whole words and phrases are missing. Many records are not in the places where they were created. And, as epigraphy, as records on durable materials are known, do not contain organic matter, they cannot be carbon-dated to know their antiquity.

Nevertheless, scholars of ancient history, experts of the languages, and scientists, including mathematicians, have been able to work out reasonably complete texts in many cases. The task is onerous, the paper says, and “involves highly complex, time-consuming and specialized workflows.” For instance, to guess what missing matter could be, scholars use other texts of similar construction or context. Such portions of texts were initially what was within the researchers’ knowledge, and more recently, as found by computer aided ‘searches.’ These processes, however, were slow and unreliable, they could miss out the appropriate leads or confuse the search, the paper says.

And, as the purpose of reconstruction is to build historical narrative, it is important to know the place where the record was created, as well as the date, as accurately as possible. If the record had been moved, its place of origin needs to be deduced from other evidence, such as letter forms or the dialect. And while the idiom or context can fix the date of production, it is not without uncertainty.

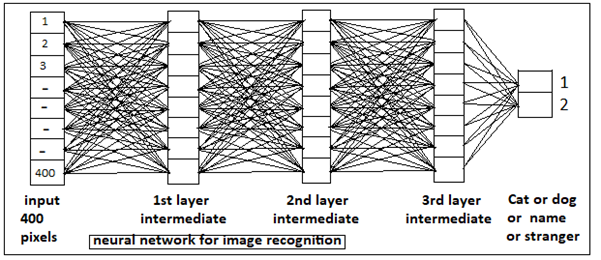

In the paper now reported, the authors substitute a deep neural network, a computer based, mass-data processing technique, to take the place of the usual methods. Neural networks are computer processes that mimic what we believe the animal brain does, to derive meaning from the data that it receives from the senses. In the case of the computer, the programmer creates a fast and accurate mechanism to carry out calculations or sort data. The animal brain, however, has no ‘programme’ to follow, it needs to work out its own process. A form of learning, based only on the data that comes in, and by refining the process, based on results.

Discovering the rule that is hidden inside data, typically, involves dealing with a very large data-set, repeatedly, through a powerful computing arrangement. While, large data-set is there, when an infant begins to receive signals of the environment through her senses, the animal brain has a vast array of computing units in the form of nerve cells. Which accounts for the unequalled perception and movement that organisms are capable of. The neural network of computers follows this method of the brain, using the very high capacity that modern computers have, to create systems that automate learning the ways of decision making, and processes of interpreting data.

An illustrative example would be image recognition. The human, and often other animal capacity to recognise people is phenomenal. At the same time, there is no method to program a computer to make people apart, with reasonable accuracy. But a neural network is able to ‘learn’ how to do it. For example, a network that can tell pictures of dogs as different from pictures of cats, is exposed to a vast data-set of labelled photographs of dogs and cats, labelled, in the sense that each picture is marked as a dog or a cat. When presented with a picture, the network typically, breaks the picture into smaller frames, and then acquires features, like the brightness and colours of the frames, and evaluates a finding, based on a set of multipliers of the different values. Every time the finding differs from the label, the network makes changes of the multipliers, to bring the finding closer to the label. After thousands, of iterations, and trials with even millions of pictures, the system gets pretty good at identifying dogs and cats. And an extension is to consider a set of feature values from a set of pictures of each person, to identify the persons themselves.

Training’ the system, of course, calls for a very large, labelled data-set. And repositories of such images are available, sometimes to buy. Systems that are tuned to criteria, like speed, or accuracy, are then built, and trained using public data-sets. The system can be used to help a mobile phone recognise its rightful user, for instance, or an entry point to recognise authorised visitors.

A variation of the process it to train the system to predict a missing word in a sentence. Here, words that follow, or precede, a set of other words, are located from a ‘dictionary’ of possible words, based on the pattern discovered, or learnt, from a massive data-set of examples. In the case of modern languages, there are ample sources of digitised text to help ‘train’ the system. The way word processors can ‘auto-complete’ words or sentences is an example of such a system in action.

Ancient Greek text

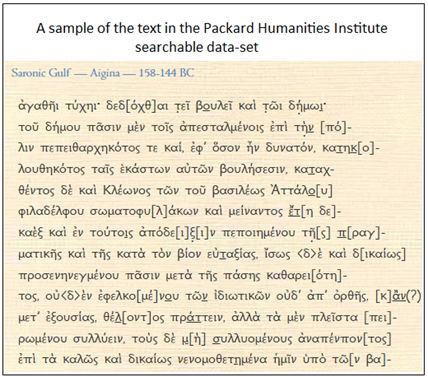

This is not the case with ancient languages not in current use. In the case of Greek epigraphy, fortunately, there is an extensive collection of text from ancient Greece, complete and annotated by scholars of Cornel and Ohio State Universities - supported by the Packard Humanities Institute, in California. The data-set, which has been created to assist historians, consists of the transcribed text of 178,551 inscriptions, the paper says. And the data-set is ‘searchable’, to that relevant portions can be readily located. And then, it contains information of the location as well as precise date of creation, as worked out by scholars.

The team hence processed this information into a machine-readable form and fed a set of 78,608 inscriptions to a neural network system, named ITHACA – after the island in Homer’s Iliad. The network is called a ‘deep neural network’, because the network consists of several layers, with as many sets of multipliers, and each layer processing the results of the previous layer.

The system was created to work collaboratively with human experts. In place of providing single predictions, the system outputs a set of 20 possible predictions, ranked by probability. The prediction is then paired with the contextual knowledge of the human experts. And the features of the inputs, that contributed most to the predictions are identified.

Along with identifying text, ITHACA also pinpoints location – again with a ranked list of possibilities – out of a range of 84 regions. And then, the dates, the dates between 800 BC and 800 ADS are put into buckets of 10 years each.

“Historians may now use Ithaca’s interpretability-augmenting aids to examine these predictions further and bring more clarity to Athenian history,” the paper says.

------------------------------------------------------------------------------------------ Do respond to : response@simplescience.in-------------------------------------------