Sensing the environment with the help of sound is not the exclusive domain of bats and dolphins, says S.Ananthanarayanan.

We become conscious of the environment through our senses, and the principal human senses are vision, touch and hearing. Sight reveals colour and great spatial detail, while touch makes us aware of heat and texture. Hearing is an important medium of perception and, in many species, the chief means of communication.

James A Traer and Josh H McDermott at the Department of Brain and Cognitive Sciences, MIT, in their paper in the journal, Proceedings of the National Academy of Sciences of the USA, point out that the signal that the ears receive is a mixture of information from many sources and there is considerable distortion and duplication of sound waves before they are heard. The kind of distortion, however, depends on the geometry of the space around us, they say, and their research is about the mechanics of how biological systems are able to segregate mixed sources and assess surroundings.

The principal difference in the way the eyes and the ears function is that the eyes need to move and face the source of light, while the ears respond to sound that comes to them from all directions. The reason, of course, is that light, for practical purposes, travels in straight lines, while sound waves, whose wavelengths are of the order of metres, pass freely around corners. Sound is also efficiently reflected by most surfaces. The result is that the eyes are able to focus on specific objects and create a detailed map of the field of view, which is possible only to a limited extent for the ears.

Apart from the eyes having lenses that focus objects sharply on the retina, which helps in estimating distance, the fact that we have a pair of eyes enables us to see ‘in depth’, that is, to make out which objects are near and which are further away. An auditory parallel of such ‘stereoscopic’ vision is ‘stereophonic’ sound, where the fact that we have two ears helps us make out where different sounds originate, so long as we hear the sounds directly, and not second hand, by echoes or via loudspeakers. In the case of depth vision, the effect is thanks to the slightly different views that the two eyes see, because they are placed a few inches apart, and to the brain learning to make use of the perspective. In the case of sound, the two ears receive, from each source, sound waves that are either a little louder or softer, or a little out of phase, which is to say, at a different stage of the wave motion, depending on the distance of the source from each ear. When the sounds at the two ears are equally loud and in phase, of course, we sense the sound as coming from directly before or behind us. Even such limited judgment, however, is often clouded by the ears also receiving sound that has been reflected off other objects or surfaces, which masks the source and causes ‘reverberation’. Reverberation can even make speech unintelligible, because the echo of each word from the walls and roof of a large hall runs into the sound of the following word!
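The timing cue that two ears provide can be put into rough numbers. The sketch below is only an illustration: it assumes a distant source, ears 0.2 metres apart and sound travelling at 343 metres per second, and it ignores the head that sits between the ears. None of these figures, nor the function name, come from the MIT study.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at about 20 degrees C
EAR_SPACING = 0.2       # metres; an assumed round figure, not from the study


def interaural_time_difference(angle_deg, ear_spacing=EAR_SPACING,
                               speed=SPEED_OF_SOUND):
    """Arrival-time difference (seconds) between the ears for a distant source.

    angle_deg is measured from straight ahead: 0 is directly in front,
    90 is directly to one side and 180 is directly behind.
    """
    # Extra distance the sound travels to reach the farther ear.
    extra_path = ear_spacing * math.sin(math.radians(angle_deg))
    return extra_path / speed


for angle in (0, 30, 90, 180):
    itd_us = interaural_time_difference(angle) * 1e6
    print(f"{angle:>3} degrees: {itd_us:6.1f} microseconds")
```

In this simple model the difference vanishes both for a source directly in front and for one directly behind, which is why timing alone cannot tell the two apart.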

The MIT duo studied the characteristics of the ever-present reverberation of sounds in places that people usually inhabit, and which part of it people can actually discriminate, since this underlies the oft-observed ability to isolate the sources of sounds. The study reveals that, based on the experience of reverberation in known places, the brain is able to separate the sound heard by the ears into contributions from the source and from the environment. This helps recognition of sounds and also provides information about the surroundings. In the case of bats and dolphins, the use of sound is much more effective and the animals’ ears are the chief organ of navigation. The difference in these cases is that what the animals listen to are not sounds created by objects in the surroundings, but the distinct echo of a high-pitched ‘click’ that the animal itself generates. There would, of course, be an element of secondary echoes in the return sound that is heard, and this may contribute to enhancing the information received, in the manner that the MIT researchers have discovered in the human subjects of their study.

The first step in the study was to see if there were statistical regularities in the reverberation found in people's normal aural environment. If there were such a ‘normal’ pattern, it might explain the observation that the brain is able to filter out the distortion caused by reverberation and recover the meaningful signal. The study hence first identified places which could be objectively considered a ‘normal’ environment, by tracking a group of volunteers with the help of random text messages 24 times a day for two weeks. The volunteers were required to respond by stating the place where they were when they received the message, and this generated a starting list of 301 locations in the Boston metropolitan area, in 271 of which it was possible to conduct further study.

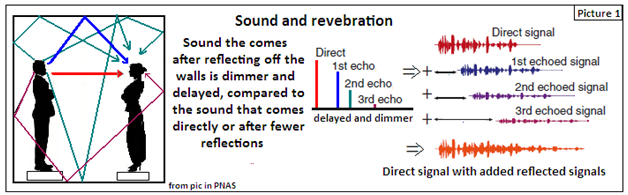

The echo and reverberation characteristics of each of the identified places were then measured. This was done by playing a fixed noise signal through a speaker placed one metre away from a microphone, which recorded what a person would hear. The paper explains that what one expects to hear is first the sound as played and then, in quick succession and even running into the first sound, the first, second, third and further echoes, as shown in picture 1.
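The idea that the microphone records the played sound followed by overlapping echoes can be sketched in a few lines. This is a toy model, not the procedure used in the paper: the function name, the four-sample burst and the echo delays and strengths are all made up for illustration.

```python
def add_echoes(dry, echoes):
    """Return what a microphone would record: the direct sound plus
    delayed, weakened copies of it.

    dry    -- list of samples of the sound as played
    echoes -- list of (delay_in_samples, gain) pairs, one per reflection
    """
    longest_delay = max((delay for delay, _ in echoes), default=0)
    recorded = [0.0] * (len(dry) + longest_delay)
    # Direct path: the sound arrives unchanged.
    for i, sample in enumerate(dry):
        recorded[i] += sample
    # Each reflection arrives later and weaker, and adds to whatever
    # is already arriving at that moment.
    for delay, gain in echoes:
        for i, sample in enumerate(dry):
            recorded[i + delay] += gain * sample
    return recorded


# Hypothetical example: a short burst and three reflections.
burst = [1.0, 1.0, 1.0, 1.0]
reflections = [(2, 0.5), (5, 0.25), (9, 0.125)]
print(add_echoes(burst, reflections))
```

Because the first reflection here is delayed by only two samples, it arrives while the burst is still sounding, which is the 'running into the first sound' effect described above.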

Trials at each of the 271 places yielded data on the sound energy received by a listener there, particularly the timing and amplitude of the echoes and the falling off of the loudness as the sound died out. The trials showed, the study says, that there was a common feature in the way the later part of the sound decayed, a result apparently not documented so far. This finding, that the reverberation that arises when sounds are generated in places which people frequent has common characteristics, leads to the possibility that the brain, with experience, learns these patterns and can then devise a way to filter the distortion out.
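One simple way to describe how the later part of a sound dies away is to fit a straight line to its level in decibels against time. The sketch below does this for a synthetic tail. It assumes the loudness falls off exponentially, which this article does not itself state, and the function name and numbers are invented for illustration rather than taken from the study.

```python
import math


def decay_rate_db_per_second(envelope, sample_rate):
    """Least-squares slope of the level (in dB) of an amplitude envelope.

    envelope    -- positive amplitude values of the reverberant tail
    sample_rate -- envelope samples per second
    """
    times = [i / sample_rate for i in range(len(envelope))]
    levels = [20.0 * math.log10(a) for a in envelope]
    n = len(envelope)
    mean_t = sum(times) / n
    mean_l = sum(levels) / n
    # Ordinary least-squares slope of level against time.
    num = sum((t - mean_t) * (l - mean_l) for t, l in zip(times, levels))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den


# Synthetic tail, built to lose 60 dB every 0.5 seconds (an assumed figure).
rate = 1000
tail = [10 ** (-60.0 * (i / rate) / 0.5 / 20.0) for i in range(500)]
slope = decay_rate_db_per_second(tail, rate)
print(f"{slope:.1f} dB per second")
```

For this made-up tail the fitted slope comes out very close to -120 dB per second, the rate it was built with. A real recording would be noisier and would usually be analysed separately in different frequency bands.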

The study thus shows that the auditory system has a working method of using past experience to filter out the distortion caused by reflections from surroundings with which the listener is familiar. This helps her to identify the source of the sound and also tells her that the surroundings are ‘normal’, when the distortion by echoes is like what is usually experienced. The ability to take experience into account when data is distorted or insufficient parallels what happens in the visual field too (see Box).

Seeing in depth

It is the pair of slightly different images seen by the two eyes that the brain puts together to create a three-dimensional image of the scene before us. But still, we routinely see things with only one eye, even while driving, for instance, and we manage to make correct judgments of distance. This is possible because the brain fills in ‘from experience’ when the visual signals are inadequate, and generally does not go wrong.

One case where the brain is not able to compensate is when we look at a photograph. The camera has only one lens, only one image is captured, and depth information is hence lost. This is the reason that photographs of scenes that have much depth, like a landscape with nearby houses and distant mountains, look flat and lifeless.

But such photographs can be ‘brought to life’ by coaxing the brain into filling in depth information where there is none in the photograph. This is done by viewing the photograph not with both eyes, but with one eye shut. The brain then does its bit and the photograph springs to life!

------------------------------------------------------------------------------------------