The current capability in massive computing can speed up the progress of drug design, says S.Ananthanarayanan.

Knowing the body biochemistry is one way of designing therapy to deal with medical conditions. Most known drugs or herbal remedies, however, were not discovered in this way but after centuries of trial and error. Modern medicine has analysed traditional cures to arrive at the functional chemicals, which has helped drug synthesis and also to understand physiology. Such wisdom, however, does not help the development of new drug remedies, which must rely on intense, and largely blind assays of the great variety of preparations or substances and their effects on pathogens or body processes.

A way to accelerate the process has been the use of machine learning and computer based artificial intelligence, which can find patterns in the features of known effective substances and screen out the less promising lines of inquiry. An improvement in this strategy, which was used to scan 72 million compounds and create a tractable list of promising candidates for anti-cancer application, has been reported by researchers Artur Kadurin, Alexander Aliper, Andrey Kazennov, Polina Mamoshina, Quentin Vanhaelen, Kuzma Khrabrov and Alex Zhavoronkov, from Moscow, Tatarstan, St Petersburg and Dolgoprudny in Russia, Truro, in Cornwall, the University of Oxford and John Hopkins University.

Their paper in the journal, Oncotarget, begins with the words, “despite the many advances in biomedical sciences, the productivity of research and development programs in the pharmaceutical industry is on the decline.” The paper explains that nearly 90% of clinical trials for all disease categories end in failure, and almost 95% fail in the case of cancer. The main reason, the paper says, is that trials have to start without a hint of where to look and the huge effort needed leads to high prices of cancer drugs.

The methods of machine learning have been strikingly successful in trend analysis, based on just some known examples. Areas of application include analyses of customer trends, improving availability of products and services, traffic control, health administration and automated diagnosis. Machine learning is now capable of voice and image recognition, even surpassing human ability, and has been successful in running driverless motor vehicles in a busy street.

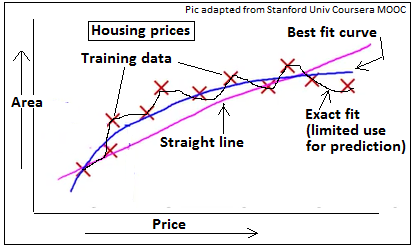

The basic method used is to make rapid calculations with a set of known data to find a mathematical formula that fits their distribution. The formula is then tested on some more known examples and if it qualifies, then it is likely to make correct predictions with unknown data too. An elementary example, from a popular course on machine learning that is offered online by Stanford University, is to consider the prices of apartments as a function of the covered area, the number of rooms, bathrooms, windows, location, etc. Computer aided analysis could discover a relationship among many of these, which could predict the price of apartments, given its features, to help sellers or buyers.

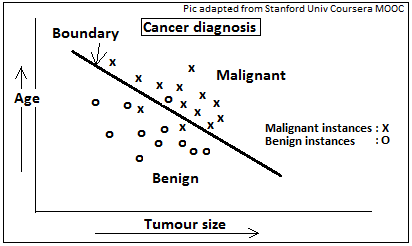

Another application would be to connect the tumour size, age of the patient and some other features, in the case of cancer, with malignancy. The formula, as developed from known cases, could be regulated to predict malignancy either in most malignant cases, or rarely to make a mistake, depending on the priority.

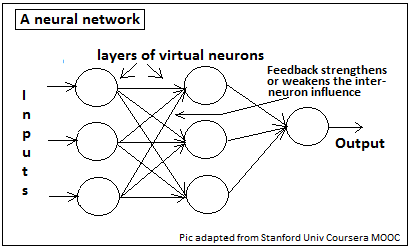

While predictions like this were first made purely by varying and refining the formulae used, a process which is possible with large data with the help of computers, it was soon realised that the animal brain seems to perform a lot better through a different angle of attack. In playing a game of chess, for instance, one way a computer could go about it is by working out all possible moves and counter-moves, by both players, from a given board position, and then choosing what move to make. It was seen however, that human players were able to do better than even powerful computers that planned several moves ahead. It was then realised that the human brain did not follow the brute force method of the computer, but seemed to take in some features of the chess board position, which may seem to be unrelated, and use these, and experience, to play in a more effective way. And the mechanism by which the brain did this was by charting the manner in which arrays of brain cells reacted to the different inputs of features of the chess board. Given a set of responses by brain cells, the same responses were either strengthened or weakened, depending on their outcome. Over a series of actual instances, the brain adapts to making more effective responses and continues to learn with experience. This is the mechanism that leads not only to good chess playing but also how a child internalises the nuances of a language faster than years of study by scholars!

Neural networks

Computers were now programmed to simulate this architecture by creating virtual neurons, or software that behaved like brain cells. In a simple instance of recognising a single feature, the feature could be presented to a single virtual neuron. The neuron responds at random, from a pair of choices. If the answer is correct, there is feedback that adds to the probability of that response, and if the answer is wrong, the feedback lowers the probability. We can see that this device would soon learn, through a random process, to consistently make the correct response.

We can also see that the same process could deal with a choice of more than only two responses, and we could also have a brace of artificial neurons that would send their responses to another set of neurons, and so on. By creating this kind of network, and sending feedback from layer to layer, it is possible to develop a computer system that can identify an image as being that of a car or a pedestrian, for instance, and then if the pedestrian was a man or a woman!

In applying this process to drug design, a large number of features of many candidate substances, whose therapeutic value is known, is presented to neural networks, to generate responses of whether the substances are useful in cancer therapy or not. The feedback of whether the responses were correct is the process by which the network ‘learns’ the importance of different combinations of features. Large computer facilities are able to simulate extensive neural networks and train the system with a range of features, to filter out substances of doubtful potential and reduce the load at the final stages of drug research.

Adversarial model

While these applications of machine learning deal with classification, even discovering classes, or discrimination, other applications are of generating instances that should lie within a given or discovered class. These applications are useful in image generation, synthesising a texture or cleaning signals of noise. An application in drug research would be to go from characteristics of known curative agents to the specifications for new drugs.

The authors of the Oncotarget paper made use of available databases of drug characteristics and an advanced machine learning technique where one part of the program tries to generate new instances that cannot be distinguished from a base set while another part (as an ‘adversary’) works to uncover these generated instances. The result is an optimum of similarity with novelty, or new and effective drug templates.

The paper describes a trial where the system was trained with the features of 6,252 compounds that were effective against MCF-7, a recognised line of breast cancer cells. The system was then used to screen 72 million compounds from a freely accessible database of compounds and small molecules. The properties of the resulting set of 69 compounds, culled out of the 72 million, were then assessed with the help of another database of compounds that had anticancer capability. It was found that several of the 69 compounds were known as anticancer agents and most were related to a group of highly effective cancer drugs.

This is a significant achievement, that a computer trial has isolated a biologically relevant subset out or a very large collection, an exercise that would not have been possible through laboratory methods. While nature, after centuries of evolution, provides us with a nearly infinite variety of substances, we now have a machine learning procedure that helps us sift through the mass to find those that serve specific purposes.

------------------------------------------------------------------------------------------