Machines have been taught to learn and surpass their trainers, says S. Ananthanarayanan.

Card games like bridge or poker, where opponents use deception, introduce another level of complexity. But the pinnacle was programming a computer to match grand masters at chess. When this bastion fell, the challenge shifted to the game of Go, an ancient Chinese board game (see box) of even greater complexity. This, too, was mastered in 2016, when the British artificial intelligence company DeepMind Technologies created the programme AlphaGo, which defeated Lee Sedol, the 18-time world champion. David Silver, Julian Schrittwieser, Karen Simonyan, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, Yutian Chen, Timothy Lillicrap, Fan Hui, Laurent Sifre, George van den Driessche, Thore Graepel and Demis Hassabis, all from DeepMind, now report in the journal Nature an improvement, named AlphaGo Zero, which achieves “a long-standing goal of AI – an algorithm that self-trains to superhuman proficiency without expensive human-input”.

This ability of the algorithm to train itself is of great importance, as machine learning is now used in a variety of commercial, industrial and scientific fields, all of which face high costs in training the AI machine. Applications include analysis of customer trends, improving the supply of products and services, traffic control, health administration, automated diagnosis and drug discovery, voice and image recognition, and driving motor vehicles on busy streets.

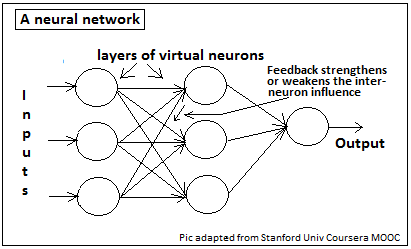

The basic method used is to fit complex, but known, data to a mathematical formula. The formula is then tested on other sample data and, if it qualifies, it can be used to predict unknown data too. While predictions improved with massive data sets, it was soon realised that the animal brain does even better through a different angle of attack. In playing a game of chess, for instance, a computer could work out all possible moves and counter-moves, by both players, for several turns ahead of a given board position, and then choose the move to make. Human players lack such ability, but they could still defeat even powerful computers. It was realised that the human brain does not follow the brute-force method of the computer, but takes in some features of the chess board position, which may seem unrelated, and uses these, along with experience, to play more effectively. The brain registers how different brain cells respond to the features of the board, and what outcomes follow. Responses that lead to good outcomes are strengthened and those that lead to poor outcomes are weakened. Over a series of actual instances, the brain adapts to making more effective responses and continues to learn with experience. This mechanism leads not only to good chess playing but is also the way a child internalises the nuances of a language faster than years of study by scholars!
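The fit-then-test idea can be shown with the simplest possible formula, a straight line. This is a minimal sketch under stated assumptions: the data are invented (a hidden rule y = 1 + 2x plus random noise), and the helper names `fit_line` and `mean_abs_error` are hypothetical. The line is fitted by least squares on the first 20 "known" points and then checked on 5 points it has never seen.

```python
import random

def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def mean_abs_error(model, xs, ys):
    """Average size of the prediction error on a set of points."""
    intercept, slope = model
    return sum(abs(intercept + slope * x - y) for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data: a hidden rule y = 1 + 2x, blurred by measurement noise.
rng = random.Random(42)
xs = list(range(25))
ys = [1 + 2 * x + rng.gauss(0, 0.5) for x in xs]

# Fit on the first 20 "known" points, then test on 5 points the fit has never seen.
train_x, train_y = xs[:20], ys[:20]
test_x, test_y = xs[20:], ys[20:]
model = fit_line(train_x, train_y)
print("fitted intercept and slope:", model)
print("error on unseen data:", mean_abs_error(model, test_x, test_y))
```

If the error on the unseen points stays close to the noise level, the formula "qualifies" and can be trusted for further predictions.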

Neural networks

Computers were now programmed to simulate this architecture by creating virtual neurons, or software that behaves like brain cells. In a simple instance of recognising just one feature, the feature could be presented to a single virtual neuron. The neuron responds at random, from a set of choices. If the answer is correct, feedback increases the probability of that response, and if the answer is wrong, feedback lowers it. We can see that this device would soon learn, through a random process, to consistently make the correct response. A set of artificial neurons that send their responses to another set, and so on, could deal with several inputs of greater complexity. A network like this could learn to identify an image as being that of a car or a pedestrian, for instance, and, if a pedestrian, whether a man or a woman!
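The single-neuron learner described above can be sketched in a few lines. This is an illustration under assumptions, not how modern networks are actually trained (they usually adjust connection weights by calculus-based methods): the response labels are hypothetical, and the feedback rule, multiplying the chosen response's probability up by 10% when right and down by 10% when wrong and then rescaling, is just one simple choice.

```python
import random

def train_neuron(choices, correct, rounds=500, rate=0.1, seed=1):
    """Train one virtual neuron to pick the correct response by trial and feedback."""
    rng = random.Random(seed)
    # Start with no preference: every response is equally likely.
    probs = {c: 1 / len(choices) for c in choices}
    for _ in range(rounds):
        # The neuron answers at random, weighted by its current probabilities.
        response = rng.choices(list(probs), weights=list(probs.values()))[0]
        # Feedback: raise the chosen response if right, lower it if wrong.
        probs[response] *= (1 + rate) if response == correct else (1 - rate)
        # Rescale so the probabilities still add up to 1.
        total = sum(probs.values())
        probs = {c: p / total for c, p in probs.items()}
    return probs

# Hypothetical task: the feature shown to the neuron always belongs to a car.
learned = train_neuron(["car", "pedestrian", "cyclist"], correct="car")
print(learned)
```

With this rule the correct answer's share can only grow relative to the wrong ones, so after a few hundred trials the neuron answers "car" almost every time, even though it started by guessing.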

In learning to play games, AI systems are designed to take in different features, like immediate threats, potential threats, a metric to evaluate positional advantage, ways to detect patterns in an opponent’s moves, the relative costs to be paid for gains, and so on. The system then plans a series of responses to possible future moves of the opponent and could evaluate the options against a database of past games. Alternatively, the system could shadow real games of expert players and evaluate proposed moves by comparison with the moves the experts selected. One strategy to filter moves is to evaluate a move only until it is found to be inferior to a previously evaluated one, and then drop it. The evaluation itself may be by traversing the tree that is formed when each move can have different responses, each of which can have several responses, and so on. Or there could be ways of assigning approximate values to moves. A combination of both is Monte Carlo Tree Search, which uses random sampling of possible continuations to estimate the value of moves.
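Dropping a move as soon as it proves inferior to one already evaluated is essentially the idea behind alpha-beta pruning of a game tree. The sketch below assumes a toy tree written as nested lists, with invented scores at the leaves (higher is better for the player to move at the root); it is an illustration, not the search used by any particular program.

```python
import math

def alphabeta(node, maximising, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of a game tree; leaves are scores, inner nodes are lists of replies."""
    if visited is None:
        visited = []
    if not isinstance(node, list):
        visited.append(node)  # record which end positions actually had to be scored
        return node
    if maximising:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the opponent would never allow this line: skip the rest
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # already worse for us than a move examined earlier
    return best

# Three candidate moves, each with two possible replies (hypothetical scores).
tree = [[3, 5], [2, 9], [0, 1]]
seen = []
print(alphabeta(tree, True, visited=seen), seen)  # prints 3 [3, 5, 2, 0]
```

Once the first move is known to guarantee a score of 3, a single reply scoring 2 or 0 is enough to reject each of the other moves, so the leaves 9 and 1 are never examined.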

AlphaGo, which beat Lee Sedol in four out of five games, employed a pair of neural networks – one to predict the probability of opponent moves and another to evaluate the potential of a board position. The first network was trained with the help of human experts and the second by simulating the game after the given position. These two networks gave rise to a branching inverted tree of moves and countermoves, which helped select the way forward.
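How a move-predicting network and a position-evaluating network can jointly steer a tree search is illustrated by the selection step below. It follows the form of the PUCT rule published for AlphaGo Zero (average value found so far, plus a bonus proportional to the policy prior that shrinks as a move is explored); the constant `c_puct = 1.5` and all the statistics are invented for illustration, and the function name `select_move` is hypothetical.

```python
import math

def select_move(stats, c_puct=1.5):
    """stats maps move -> (prior P, visit count N, total value W). Returns the move to explore next."""
    total_visits = sum(n for _, n, _ in stats.values())

    def score(move):
        prior, visits, total_value = stats[move]
        # Average result seen so far below this move (from evaluating positions).
        q = total_value / visits if visits else 0.0
        # Exploration bonus: large for moves the policy network rates highly but that are rarely tried.
        u = c_puct * prior * math.sqrt(total_visits) / (1 + visits)
        return q + u

    return max(stats, key=score)

# Hypothetical statistics at one node of the search tree.
stats = {
    "A": (0.6, 10, 5.0),  # favoured by the policy, already explored a lot
    "B": (0.3, 2, 1.4),   # fewer visits, but good results so far
    "C": (0.1, 0, 0.0),   # never tried, low prior
}
print(select_move(stats))
```

Here move B scores about 1.22, against about 0.78 for A and 0.52 for C, so the search would explore B next; repeating this step many times grows the tree along the most promising lines.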

AlphaGo Zero, the current version, uses a single neural network and a simpler tree search to evaluate moves and positions. The greatest improvement, however, is that the algorithm needs no human input for training. The game, which it plays against itself, starts with simple, untrained, random moves, and the positions created are ‘evaluated’. Evaluation results in reinforcing some choices in the random selection, and the game is played again. “In each iteration, the performance of the system improves by a small amount, and the quality of the self-play games increases, leading to more and more accurate neural networks and ever stronger versions of AlphaGo Zero,” two of the authors say in a press release. The algorithm hence starts from “tabula rasa”, or a ‘blank slate’, as philosopher John Locke described the mind of an infant, and learns from itself to rapidly become the strongest Go player (and hence teacher) in the world. The method “is no longer constrained by the limits of human knowledge,” the press release says.
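The self-play loop can be illustrated on a far smaller game than Go. This is a toy under loud assumptions, not DeepMind's method: it uses a hypothetical take-away game (players alternately remove 1 to 3 stones from a pile, and whoever takes the last stone wins) and a simple lookup table of position values in place of a neural network. The program starts from a blank slate, plays against itself, and after each game nudges the value of every position it visited toward the result.

```python
import random

MAX_TAKE = 3      # a player may remove 1, 2 or 3 stones (assumed toy rules)
MAX_STONES = 10   # largest starting pile used in training

def legal_moves(stones):
    return [t for t in range(1, MAX_TAKE + 1) if t <= stones]

def best_move(values, stones):
    """Greedy choice: leave the opponent the position it is least likely to win."""
    return min(legal_moves(stones), key=lambda t: values[stones - t])

def self_play(episodes=20000, alpha=0.05, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # values[s]: estimated chance that the player about to move, facing s stones, wins.
    # Every position starts at 0.5: the program knows nothing (a blank slate).
    values = [0.5] * (MAX_STONES + 1)
    values[0] = 0.0  # facing an empty pile means the opponent took the last stone
    for _ in range(episodes):
        stones = rng.randint(1, MAX_STONES)
        player = 0
        history = []  # (position, player to move) for every turn of this game
        while stones > 0:
            history.append((stones, player))
            if rng.random() < epsilon:
                take = rng.choice(legal_moves(stones))  # occasional random exploration
            else:
                take = best_move(values, stones)
            stones -= take
            player = 1 - player
        winner = 1 - player  # the player who made the last move emptied the pile
        # Reinforce: move each visited position's value toward the outcome for its mover.
        for position, mover in history:
            target = 1.0 if mover == winner else 0.0
            values[position] += alpha * (target - values[position])
    return values

values = self_play()
for s in range(1, MAX_STONES + 1):
    print(s, "stones: take", best_move(values, s))
```

After training, `best_move` should leave the opponent a multiple of four stones whenever it can, the known winning strategy for this game, although the program was never told it. Each round of self-play improves the table a little, which in turn makes the next games more informative.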

AlphaGo Zero was able to beat AlphaGo 100-0 in a trial after just 3 days of training, compared with the months AlphaGo needed. It also ran on a single machine with 4 specialised neural network chips, against the multiple machines and 48 specialised chips used by AlphaGo. But the real gain is not a better Go player; it is an artificial intelligence strategy that could help machines handle the complexities of an interconnected, increasingly mechanised world under pressure to optimise energy and resources.

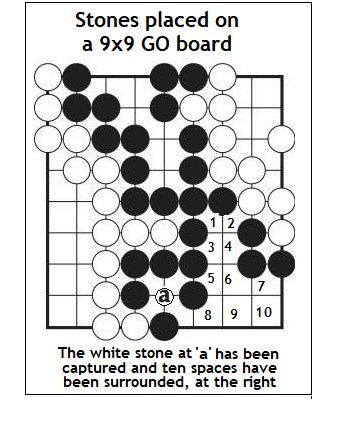

The game of Go

Black and white tokens, called stones, are placed, one per turn, on the intersections of a grid of 9x9, 13x13 or 19x19 lines drawn on a board. The objective is to surround stones of the opposite colour with one's own stones, which counts as ‘capture’, or to build closed loops of stones, in which case the number of vacant intersections enclosed adds to the player’s score. The total of captures and spaces enclosed is the final score. Players use strategies to maximise the score. For example, a stone may be at risk of capture unless the player occupies the last free intersection next to it. But the player may choose to ‘sacrifice’ the stone for the advantage of extending a loop in another part of the board. The opponent would then have to choose between capturing the stone offered and preventing the player from stealing a march elsewhere!
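The capture rule can be checked mechanically: a group of connected stones of one colour is captured when none of its neighbouring intersections is vacant. Go players call those vacant neighbours "liberties", a standard term not used above. The sketch assumes a hypothetical text board where "." is empty, "B" black and "W" white; the function name `group_and_liberties` is illustrative.

```python
def group_and_liberties(board, row, col):
    """Flood-fill the group containing (row, col); return (stones, liberties) as sets."""
    colour = board[row][col]
    rows, cols = len(board), len(board[0])
    stones, liberties = set(), set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        if (r, c) in stones:
            continue
        stones.add((r, c))
        # Look at the four neighbouring intersections (no diagonals in Go).
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                if board[nr][nc] == ".":
                    liberties.add((nr, nc))          # a free intersection next to the group
                elif board[nr][nc] == colour and (nr, nc) not in stones:
                    stack.append((nr, nc))           # a friendly stone joins the group
    return stones, liberties

# The white stone is surrounded on all four sides: no liberties, so it is captured.
surrounded = [".B...", "BWB..", ".B...", ".....", "....."]
# One black stone missing: white has a single liberty left, the "last free intersection".
at_risk = [".B...", "BW...", ".B...", ".....", "....."]
print(group_and_liberties(surrounded, 1, 1))
print(group_and_liberties(at_risk, 1, 1))
```

The second board is the situation described in the box: White must occupy the remaining free point (or let the stone go as a sacrifice) before Black fills it.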

The contest rapidly becomes devilishly complex. IBM’s ‘Deep Blue’ succeeded in beating the chess world champion, Garry Kasparov, in a match in 1997.

But it took nearly twenty more years before AlphaGo was developed to beat Lee Sedol.

------------------------------------------------------------------------------------------
Do respond to: response@simplescience.in