Persons who have lost most of their muscle function may still be able to speak, says S. Ananthanarayanan.

Not being able to speak may be worse than other forms of paralysis, as it affects the ability to communicate. Affected persons usually devise a way to spell out the words they want to say, and so are able to stay productive. Patients of stroke, ALS (see box) or other diseases that compromise speech may, for instance, use a keyboard where that is possible. And when the fingers cannot work a keyboard, there is technology that tracks the movement of the pupil of the eye to identify letters. Patients can then use a computer, and the celebrated Stephen Hawking could address audiences with the help of a voice synthesizer.

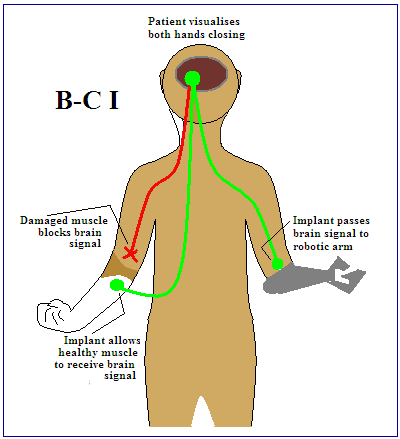

Things get serious when patients lose the ability to move the pupils of the eyes. Even communicating personal needs or a medical condition then becomes a challenge. A technology called Brain-Computer Interface, or BCI, could then come to the rescue. This technology was first used to help patients who had lost the ability to work a limb because a nerve was severed, by connecting the part of the brain that controlled the limb directly to the muscles. If the limb itself had been lost, the signal from the brain could work a robotic limb. The technology was then adapted to read brain signals in the form of letters of the alphabet and display the letters on a screen. A user could then think, or mime, the names of letters and type out a message.

These methods, of course, are much slower than normal speech. Spelling out a message at eight words a minute is considered good going, but it does not compare with the 150 or so words a minute of normal speech. A group working in California, however, reports a breakthrough where brain signals directly produce spoken sentences, without going through the route of spelling and synthesis. Gopala K. Anumanchipalli, Josh Chartier and Edward F. Chang, from the University of California at San Francisco and at Berkeley, write in the journal, Nature, that they have succeeded in tapping into the nerve signals in the brain which activate the jaws and the larynx for normal speech, and then using artificial intelligence to generate audio signals of speech itself.

The reason that AI has to be used is that the sequence through which brain signals are translated into speech is too complex to negotiate by ordinary means. A commentary on the University of California paper says the process “requires precise, dynamic coordination of muscles in the articulator structures of the vocal tract — the lips, tongue, larynx and jaw.” The paper itself says over a hundred muscles are involved and the movements and sounds are not connected in a simple way. In place of working out how all this works in practice, the method used is simply to collect sets of nerve signals and seek patterns in the signals that correspond to components of actual speech. This is the same approach, trial and error, which helps a human infant learn speech through the muscle manipulations that result in different sounds. Whenever a random series of nerve impulses produces a successful result, the pathways followed by impulses get strengthened. Over a period of trials, the feedback due to success ensures that the specific neural network that leads to a given sound is used when that sound is desired.

Artificial Intelligence uses the same framework to ‘train’ a computer to ‘learn’ tasks. This replaces the need to develop complex, and often impossible, computer programs for the same purpose. Typically, a set of inputs, say nerve signals, is made to result, repeatedly, in random outputs, which generate different sounds. Whenever a correct sound is generated, the probability that those particular outputs result from that set of inputs is increased. The outcome, after several trials, is that the combinations of outputs that lead to correct sounds, given certain inputs, become the most likely, and finally the only ones that are produced. The system could then be said to have learnt to generate specific audio outputs in response to the nerve signals that are fed to it. Based on learning by trial and error in this way, Artificial Intelligence methods have trained computers to carry out complex tasks: play chess, diagnose diseases, make market projections, even drive cars.
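The trial-and-error idea described above can be tried out in a few lines of Python. This is only a toy sketch and not the method used in the paper: the signal names, the three sounds, the reward of 1.0 and the number of trials are all invented for illustration. Each input starts with every output equally likely, and an output becomes more likely each time it turns out to be the correct sound.

```python
import random

def train(target, outputs, trials=2000, reward=1.0, seed=0):
    """Reinforce the outputs that turn out to be correct (toy example)."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    # To begin with, every output is equally likely for every input.
    weights = {inp: {out: 1.0 for out in outputs} for inp in target}
    inputs = list(target)
    for _ in range(trials):
        inp = rng.choice(inputs)
        options = list(weights[inp])
        # Pick an output at random, in proportion to its current weight.
        guess = rng.choices(options, weights=[weights[inp][o] for o in options])[0]
        if guess == target[inp]:
            # Success: make this output more likely the next time.
            weights[inp][guess] += reward
    return weights

def most_likely(weights):
    """For each input, return the output that now carries the largest weight."""
    return {inp: max(w, key=w.get) for inp, w in weights.items()}

# Hypothetical pairing of nerve-signal patterns with the sounds they should make.
TARGET = {"signal_a": "ba", "signal_b": "da", "signal_c": "ga"}

weights = train(TARGET, outputs=["ba", "da", "ga"])
learned = most_likely(weights)
print(learned)
```

Only successes are rewarded here, so the wrong outputs keep their starting weight while the right one grows, which is the sense in which the correct combination "becomes the most likely". Real systems adjust many thousands of numerical weights in a neural network rather than a small table like this one.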

In the current work, the researchers used probes to access the brain signals of a set of volunteers. The signals were from the parts of the brain which control speech and the related muscles, and were recorded while the volunteers spoke different sentences. There are methods to go directly from brain signals, recorded while different sounds are made, to synthesizing the same speech. The current group, however, used a two-step method, first estimating the movements that the signals evoked in the anatomical structures involved in speech. This decoding of the brain signals was carried out based on large existing data of the muscle movements associated with known speech recordings. Using this data, the system was trained to work out the anatomical movements that go with the brain signals recorded when the volunteers spoke, or even only mimed speech. And then, actual speech was synthesized based on the anatomical movements estimated.
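The two-step idea can be pictured with a small Python sketch. It is an illustration under heavy assumptions, not the researchers' system: a straight-line fit stands in for their trained decoders, each quantity is reduced to a single number, and all the figures and names (`brain_signal`, `jaw_opening`, `pitch`) are invented. The point is only that the second step, from movement to sound, is learnt from separate data that needs no brain recordings.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new value."""
    slope, intercept = model
    return slope * x + intercept

# Step 1 data (invented): brain signal and the jaw movement seen with it.
brain_signal = [0.0, 1.0, 2.0, 3.0, 4.0]
jaw_opening = [1.0, 3.0, 5.0, 7.0, 9.0]

# Step 2 data (invented): a separate record of movements and the sound made.
recorded_movement = [0.0, 5.0, 10.0]
pitch = [100.0, 150.0, 200.0]

signal_to_movement = fit_line(brain_signal, jaw_opening)
movement_to_sound = fit_line(recorded_movement, pitch)

def decode(signal):
    """Go from brain signal to sound by way of the estimated movement."""
    movement = predict(signal_to_movement, signal)
    return predict(movement_to_sound, movement)

decoded = decode(2.5)
print(decoded)
```

Splitting the task this way means that the difficult-to-collect brain recordings are needed only for the first step.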

The paper says that tests of intelligibility showed that the synthesized speech could be made out fairly accurately. The two-step method side-stepped the need to collect large amounts of brain-signal-to-speech data for training the system to go from brain signals directly to speech. A significant observation is that the system could be trained even without the subjects having to actually speak. They could just mime speech to generate the required brain signals. This is significant because it may be possible for the system to help patients of ALS and other conditions, who have lost the ability to speak, on the basis only of signals in the brain cortex when the patient imagines that she is speaking.

While the paper cautions that the work, so far, is only a ‘proof of concept’, it does point to ways of improving the quality of life of affected persons.

ALS stands for Amyotrophic Lateral Sclerosis. ‘A’ is a Greek prefix for ‘no’, ‘myo’ refers to ‘muscle’ and ‘trophic’ means ‘nourishment’. ‘Lateral’ refers to the part of the spinal cord which controls muscles and movement, and ‘sclerosis’ means hardening or damage.

ALS thus leads to a breakdown of the communication from the brain to the muscles. Patients progressively lose the ability to move, eat, speak and even breathe. There is no cure known so far, but there are drugs that retard the progress of the disease to some degree.

Do respond to: response@simplescience.in