How the brain translates sounds, in the form of speech, into meaning, is an enduring mystery. The fields of biology, medicine, mathematics, IT and AI are engaged in unravelling its complexity., says S.Ananthanarayanan.

Yulia Oganian and Edward F Chang, from the Department of Neurological Surgery in the University of California at Los Angeles describe in the journal, Science Advances, their work on how a part of the physical human brain responds to rising volume, or inflexions of loudness, to mark syllables heard in speech. The timing and extent of these changes in the level of sound underlies the coding of speech to meaning, the authors suggest.

The current understanding of the way organisms learn and make decisions is that neural pathways in the brain, or connections between brain cells, are strengthened when specific, but chance responses to stimuli turn out to be pleasant or useful. These responses are then likely to be repeated, and finally they are learnt. This mechanism is simulated in Artificial Intelligence (AI), which enables computers to discover trends in data or develop strategies with greater efficiency than they could by the usual methods of mathematical analyses

For such analyses to be applied to speech and understanding, however, we need to understand how the barrage of electric signals, created by sound waves that strike the ears, are translated in the brain. And in this quest, analyses of the features of the sounds in speech, the sound of the vowels and consonants, patterns in the sounds and the sequences of words, similarities among languages, all become part of the study

Human languages involve complex grammars and large vocabularies. There are hence a great many factors to consider and it is difficult to work out the basic codes into which the brain may split the sounds. Scientists have thus studied the simpler patterns, in the vocalization of birds, which appear to communicate extensively with sound. Repetitive patterns, melodic forms, and even basic grammatical forms have been recognized in birdsong.

While studying dolphins, another species that uses sounds to communicate, it was found that there was a pattern in the variations of the pitch of the sounds, which was heard and remembered as the identity of individual dolphins. The study, in fact, made use of a modern, mathematical method that produces a concise coding of musical compositions of humans, and has helped computers search for instances of plagiarism or copy, over the Internet.

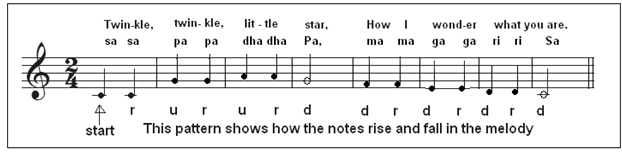

We know that melodies consist of patterns of rise and fall of pitch, along with variations in loudness and time. As there can be a huge number of combinations, the representation of a melody would involve a large collection of data. A simplified coding method is the Parson’s Code, just the series of instances of the pitch rising, falling or staying unchanged. The picture shows the notes that appear in the song, ‘Twinkle, twinkle, little star.’ The notes are shown in the Indian notation and as western staff notation and the Parson’s code, of the rising, falling or steady pitch is also shown, ‘u’ as rise in pitch, ‘d’ as fall and ‘r’ as unchanged:

The code is certainly not a way to record or reproduce a tune, but the code turns out to be unique and a signature for most melodies. Using this code to mark the whistles of Dolphins, scientists were able to identify the signals sent out by each one and they found that the whistles had meaning. One meaning the whistles contained was identity, but as the whistles were unique, there may be more that they convey.

While these and other studies could help understand the nature of language, and meaning, once a pattern of words was there, one question is how the brain makes out the different words, given a continuous stream of sound signals. In computer coding of text, the letters are defined as combinations of ‘0’ and ‘1’, exactly eight characters long and there are specific codes to indicate spaces and full stops. In the genetic code in DNA, again, there are specific molecule combinations to mark the beginning and end of the coding for each protein. But how is the brain able to tell, from the stream of electrical impulses that it receives, that this is the end of one word and the beginning of the next?

The authors of the paper in Science Advances saw that there was a possibility of experiments with a collection of patients who were undergoing brain surgery at the Epilepsy Centre of the University. In preparation for surgery, the patients had arrays of electrodes attached to the brain surface, so that surgeons could map brain activity and assess what parts of brain tissue it would be necessary and safe to excise. As the electrodes were in place, the University of California team was able to record data of brain responses to sounds heard by patients who volunteered.

The trials were conducted with eleven patients where the electrodes were place at areas associated with speech processing. The brain activity at these centres were then recorded while the volunteers listened to different speech recordings that were played to them. The result was a mass of data of the electrical activity of nerve cells in the brain in response to changing syllabic pattern and the varying loudness that we encounter in normal speech

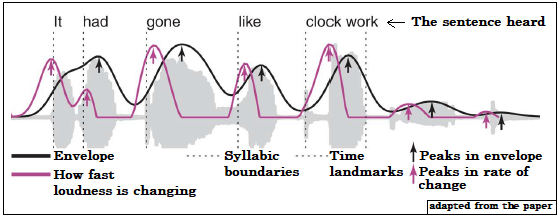

“The most salient acoustic features in speech are the modulations in its intensity, captured by the amplitude envelope,” the authors say. The amplitude envelope is outline of the variation in loudness of speech, as seen in a graphical representation, as shown in the picture.

The grey part of the picture is the sound energy, furthest from the baseline when the sound is louder. The black line is the outline, or the envelope. But the rate of getting louder, the purple line, is not the highest at the point when the sound is the loudest (in fact, the loudness starts falling once the loudest point is reached). The fastest increase is earlier, when the sound is feeble but rising.

The envelope thus shows the timing of the emphasis in the speech, as at the beginning of the words in the sentence, “It had gone like clock-work.” The words are separated and the places where the speaker places emphasis are marked. The paper says that when synthesized sound was used, it was seen that faster increase in loudness led to greater neuronal activity, rather than loudness.

The result indicates that the brain uses, and this may be a learnt behavior, features in the stream of data that it receives to separate the words and the places of stress, to differentiate between different sounds and hence the meaning. We are all familiar with how changing the point of stress in a sentence (like the place where a comma appears) can change the meaning of what is conveyed. In the Chinese language (and there may be others) the very meaning of words changes if the tone is rising, falling or both or flat!

------------------------------------------------------------------------------------------ Do respond to : response@simplescience.in-------------------------------------------